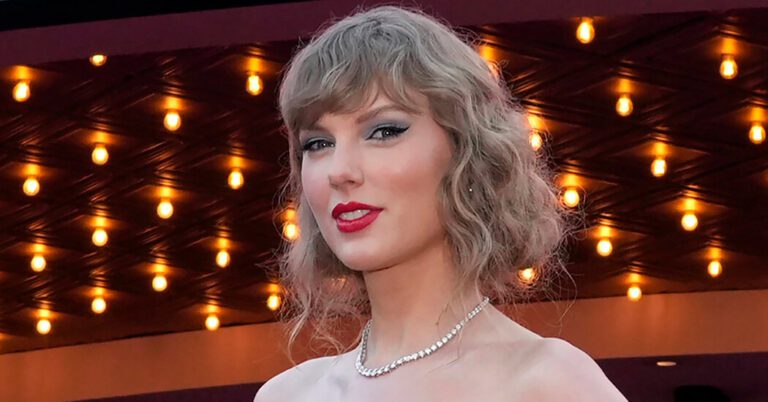

Fake, sexually explicit images of Taylor Swift likely generated by artificial intelligence spread rapidly across social media platforms this week, disturbing fans who saw them and reigniting calls from lawmakers to protect women and crack down on the platforms and technology that spread such images.

One image shared by a user on X, formerly Twitter, was viewed 47 million times before the account was suspended on Thursday. X suspended several accounts that posted the faked images of Ms. Swift, but the images were shared on other social media platforms and continued to spread despite those companies’ efforts to remove them.

While X said it was working to remove the images, fans of the pop superstar flooded the platform in protest. They posted related keywords, along with the sentence “Protect Taylor Swift,” in an effort to drown out the explicit images and make them harder to find.

Reality Defender, a cybersecurity company focused on detecting A.I., determined with 90 percent confidence that the images were created using a diffusion model, an A.I.-driven technology accessible through more than 100,000 apps and publicly available models, said Ben Colman, the company’s co-founder and chief executive.

As the A.I. industry has boomed, companies have raced to release tools that enable users to create images, videos, text and audio recordings with simple prompts. The A.I. tools are wildly popular but have made it easier and cheaper than ever to create so-called deepfakes, which portray people doing or saying things they have never done.

Researchers now fear that deepfakes are becoming a powerful disinformation force, enabling everyday internet users to create nonconsensual nude images or embarrassing portrayals of political candidates. Artificial intelligence was used to create fake robocalls of President Biden during the New Hampshire primary, and Ms. Swift was featured this month in deepfake ads hawking cookware.

“It’s always been a dark undercurrent of the internet, nonconsensual pornography of various sorts,” said Oren Etzioni, a computer science professor at the University of Washington who works on deepfake detection. “Now it’s a new strain of it that’s particularly noxious.”

“We’re going to see a tsunami of these A.I.-generated explicit images. The people who generated this see this as a success,” Mr. Etzioni said.

X said it had a zero-tolerance policy toward the content. “Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them,” a representative said in a statement. “We’re closely monitoring the situation to ensure that any further violations are immediately addressed, and the content is removed.”

X has seen an increase in problematic content, including harassment, disinformation and hate speech, since Elon Musk bought the service in 2022. He has loosened the website’s content rules and fired, laid off or accepted the resignations of staff members who worked to remove such content. The platform also reinstated accounts that had previously been banned for violating its rules.

Although many of the companies that produce generative A.I. tools ban their users from creating explicit imagery, people find ways to break the rules. “It’s an arms race, and it seems that whenever somebody comes up with a guardrail, someone else figures out how to jailbreak,” Mr. Etzioni said.

The images originated in a channel on the messaging app Telegram that is dedicated to producing such images, according to 404 Media, a technology news site. But the deepfakes garnered broad attention after being posted on X and other social media services, where they spread rapidly.

Some states have restricted pornographic and political deepfakes. But the restrictions have not had a strong impact, and there are no federal regulations of such deepfakes, Mr. Colman said. Platforms have tried to address deepfakes by asking users to report them, but that method has not worked, he added. By the time they are flagged, millions of users have already seen them.

“The toothpaste is already out of the tube,” he said.

Ms. Swift’s publicist, Tree Paine, did not immediately respond to requests for comment late Thursday.

The deepfakes of Ms. Swift prompted renewed calls for action from lawmakers. Representative Joe Morelle, a Democrat from New York who introduced a bill last year that would make sharing such images a federal crime, said on X that the spread of the images was “appalling,” adding: “It’s happening to women everywhere, every day.”

“I’ve repeatedly warned that AI could be used to generate non-consensual intimate imagery,” Senator Mark Warner, a Democrat from Virginia and chairman of the Senate Intelligence Committee, said of the images on X. “This is a deplorable situation.”

Representative Yvette D. Clarke, a Democrat from New York, said that advancements in artificial intelligence had made creating deepfakes easier and cheaper.

“What’s happened to Taylor Swift is nothing new,” she said.